Realism is a bad word. In a sense everything is realistic. I see no line between the imaginary and the real.

– Federico Fellini

Each time a new generation of consoles is released, the graphics move up a notch. The leap from PS1 to PS2 was perhaps the largest, but the changes from PS3 to PS4 are no slouch either. What comes next? What will PS5 graphics be like?

That’s the question we’re here to answer. With the rate at which technology and graphics are progressing, we’re thinking we could make that next big leap when the PS5 releases. Photo-realistic graphics, anyone?

The Current State of Video Game Graphics

The term “uncanny,” or “das Unheimliche” in the original German, is a concept generally known to be Freudian in nature. It has to do with things that are both familiar and alien all at once. Things that we recognize, but not in the form we expect. Would it be so much of a stretch to say that video game graphics are “uncanny” in a number of ways?

It really depends on the game. We’ve been able to make huge strides forward in facial rendering and animation. Environments look spectacular, and people and characters have never looked better. Take a game like Hellblade: Senua’s Sacrifice, for example. Check out the video below:

This is an early tech demo of the amazing technology that went into Hellblade on the PS4. Having played the full game myself, I can safely say that this is the closest I’ve seen to what the PS5’s graphics could be like.

This is all based on new technology from Epic Games, developers of the Unreal Engine 4. This same engine is used in a lot of modern games, but Hellblade: Senua’s Sacrifice really showcases how realistic human characters can be.

Now, a game like Hellblade is the exception, not the rule. We still have many games on the PS4 that fall deep into the uncanny valley. Games like Mass Effect: Andromeda and even acclaimed titles like Horizon: Zero Dawn can sometimes suffer from less-than-human appearances.

We’ve really nailed down realistic environments and physics, but lighting and believable humans still leave a bit to be desired in many games. Hopefully PS5 vs PS4 graphics will showcase a major difference in this regard.

Let’s examine the uncanny valley concept, and then move into the possibilities that we could see in the PS5’s graphics.

Close, But no Cigar: An Examination of The Uncanny Valley

One of the largest obstacles to achieving photorealistic graphics (beyond the technology limitations we will discuss later in the article) is creating people in games that look, sound, and act completely real. We’re close, but it isn’t perfect yet. The same goes for computer-generated imagery and for robotics.

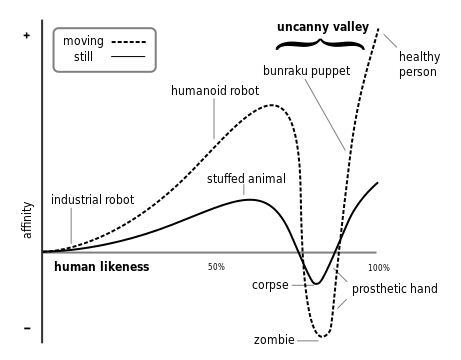

A graph representing the Uncanny Valley By Smurrayinchester [GFDL or CC-BY-SA-3.0], via Wikimedia Commons

Sigmund Freud described the uncanny as something that creates a repulsive response. Think of it like an odd feeling in your gut. The feeling is often compared to “cognitive dissonance,” a fancy term that comes from later psychology rather than from Freud himself, but the idea is still there. It rubs you the wrong way to see something that looks and sounds human, but isn’t quite right.

This brings us to the concept of the “uncanny valley.” This hypothesis was proposed in 1970 by a robotics professor named Masahiro Mori. It seeks to map out the level of revulsion that occurs when an observer views certain things that are uncanny. The professor claimed that the closer we get to authenticity, the more disturbing we find the small inaccurate elements.

The valley itself is a major dip in the graph where we reach levels below 50% familiarity. Things that fall into it include a prosthetic, a corpse, and even a zombie.

The effect is amplified if the observer is viewing something in motion. This hypothesis is applied to games, robotics, and film in various ways. It represents the struggle to create an artificial person, whether in a game, in a movie, or in robotic form, that doesn’t cause the psychological feeling of uncanniness.

Recreating Reality: The Obstacles we Face

Back in 2013, the founder of Epic Games, Tim Sweeney, made a comment that probably sucked all of the air out of the room. He said: “Video games will be absolutely photorealistic within the next 10 years.”

An article by Tom’s Guide explored some intriguing things about photorealistic graphics by including input from major players in the graphics industry. Seeing as how Epic Games makes the Unreal Engine, one of the most powerful and well-known game engines in the industry, Sweeney is in a good spot to make a claim like that.

Henrik Wann Jensen at the University of California, San Diego is a computer graphics researcher who pioneered something called subsurface scattering. This allows a program to simulate how light hits and spreads through translucent objects.

When asked about photorealistic graphics, he said that it would take longer than 10 years to reach such a plateau. He brought up a concept called “global illumination,” which would allow us to simulate how light behaves in the real world. We’re not there yet, and he doesn’t think we will be anytime soon.

To achieve something like global illumination, we would also need to master something called ray tracing. This concept describes an algorithm that tracks how millions of individual light rays travel through a scene and reflect off the various surfaces. This one algorithm covers shadows, shading, and textures.
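To make the idea a little more concrete, here is a minimal sketch of a ray tracer in Python. It is not taken from any engine mentioned in this article. The scene (a single sphere), the light direction, and every function name are assumptions made up for illustration. It fires one ray per pixel, checks whether that ray hits the sphere, and shades the hit point by how directly it faces the light.

```python
import math

# Hypothetical light direction, normalized so dot products stay in [-1, 1].
_L = 1.0 / math.sqrt(3.0)
LIGHT = (_L, _L, _L)

def intersect_sphere(origin, direction, center, radius):
    """Distance along a normalized ray to the nearest hit, or None on a miss."""
    oc = tuple(o - c for o, c in zip(origin, center))
    b = 2.0 * sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4.0 * c  # discriminant of the ray/sphere quadratic
    if disc < 0:
        return None
    t = (-b - math.sqrt(disc)) / 2.0
    return t if t > 0 else None

def shade(origin, direction, center, radius, light_dir):
    """Brightness in [0, 1] at the point where the ray hits, 0.0 on a miss."""
    t = intersect_sphere(origin, direction, center, radius)
    if t is None:
        return 0.0
    hit = tuple(o + t * d for o, d in zip(origin, direction))
    normal = tuple((h - c) / radius for h, c in zip(hit, center))
    # Simple diffuse shading: brighter where the surface faces the light.
    return max(0.0, sum(n * l for n, l in zip(normal, light_dir)))

def render(width=24, height=12):
    """Trace one ray per pixel and return the image as rows of ASCII shades."""
    shades = " .:-=+*#%@"
    rows = []
    for y in range(height):
        row = ""
        for x in range(width):
            # Map the pixel to a point on an image plane at z = -1.
            px = (x + 0.5) / width * 2 - 1
            py = 1 - (y + 0.5) / height * 2
            length = math.sqrt(px * px + py * py + 1)
            direction = (px / length, py / length, -1 / length)
            brightness = shade((0, 0, 0), direction, (0, 0, -3), 1.0, LIGHT)
            row += shades[min(9, int(brightness * 10))]
        rows.append(row)
    return rows
```

Calling `print("\n".join(render()))` draws a small shaded ball. Even this toy version does a full intersection test for every pixel, and a real scene would add many more objects plus bounces for reflections and shadows, which is where the cost piles up.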

Many believe that mastering it is a required step if we ever hope to achieve photorealism in our graphics. Ray tracing has already been around in both movies and advertising, where it is used to make a digital model of a product appear realistic. These instances are rendered ahead of time though, whereas video games would have to do the same calculations in real time, a far more difficult task.

This represents one of the main problems with achieving photorealism: doing it in a video game. If you look at modern day films, you’ll see effects that border on what seems to be perfect, but these techniques are all employed prior to the moment you watch the film. A video game must be able to do this as it is played.

This creates an issue with processing power. The current technology we have, even for PC gaming rigs, is not enough to handle an algorithm like ray tracing in real time. The current solution is something called rasterization, which allows us to achieve the graphics we see today. This is yet another algorithm (go figure) that takes three-dimensional shapes and renders them to be displayed on a two-dimensional screen.
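Here is a rough sketch of what that looks like in Python, assuming a very simple camera that sits at the origin and looks down the negative z axis. The function names and the camera setup are hypothetical, not taken from any real engine. The first step projects each 3D corner of a triangle onto the 2D screen, and the second step checks which pixels fall inside the flattened triangle.

```python
def project(point, width, height, focal=1.0):
    """Perspective-project a 3D point (z must be negative) to pixel coordinates."""
    x, y, z = point
    sx = focal * x / -z  # farther points land closer to the screen center
    sy = focal * y / -z
    return ((sx + 1) * 0.5 * width, (1 - sy) * 0.5 * height)

def edge(a, b, p):
    """Signed area test: which side of the line a->b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def rasterize(triangle, width, height):
    """Return the (x, y) pixels whose centers fall inside the projected triangle."""
    a, b, c = (project(v, width, height) for v in triangle)
    covered = []
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)
            w0, w1, w2 = edge(a, b, p), edge(b, c, p), edge(c, a, p)
            # Inside when all three edge tests agree in sign (either winding).
            if (w0 >= 0 and w1 >= 0 and w2 >= 0) or (w0 <= 0 and w1 <= 0 and w2 <= 0):
                covered.append((x, y))
    return covered
```

For example, `rasterize([(-1, -1, -1), (1, -1, -1), (0, 1, -1)], 10, 10)` returns the pixels covered by one triangle on a 10 by 10 screen. Notice that nothing here knows about light. The sketch only answers "which pixels does this shape cover?", and the color of those pixels has to come from somewhere else.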

This technique cannot simulate how things are in the real world, so it leaves a lot to the artists of a game to create those effects on their own within the game engine. If we take a popular game engine like Unity for example and look at how light behaves there, we are actually seeing the result of someone manually drawing those shadows and not a direct reaction to the light itself.

Currently everything from shadows, to textures, to color is done by hand. With ray tracing and another up-and-coming technique called photogrammetry, artists will have less work to handle and the graphics will be far more realistic as a result.

There’s still one more issue though, and it’s one that you wouldn’t think of unless someone mentioned it to you directly. I know I didn’t. I was reading an article on Extreme Tech about the diminishing returns on current generation consoles, and it got me thinking.

In the article, they use an example of polygon counts. During the early generations of video game graphics, it wasn’t unheard of for a character model to consist of, say, 60 triangles. The jump from 60 triangles to, say, 600 resulted in a huge graphical jump. Going from 600 to 6,000 was certainly noticeable as well, but going from 6,000 to 60,000 suddenly produces what looks like only a minor change.

How is that possible? Wouldn’t a huge jump like that show a huge return? The answer is no, not much. Once you hit that many triangles and polygons, the level of detail becomes incredibly specific. Even massive changes only result in minor changes to the overall look. If we want photo-realism, we need to do much more than double, triple, or even quadruple the polygon counts. That’s going to be tough.
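You can see the same pattern with a much simpler shape. The sketch below is my own illustration, not the Extreme Tech article’s analysis. It assumes a perfect circle stands in for a character’s silhouette and measures how much of that circle a regular polygon with a given number of sides fails to cover. The function name is made up for this example.

```python
import math

def missing_fraction(sides):
    """Fraction of a unit circle's area that an inscribed regular polygon misses."""
    polygon_area = 0.5 * sides * math.sin(2 * math.pi / sides)
    return 1 - polygon_area / math.pi

for sides in (6, 60, 600, 6000):
    print(f"{sides:>5} sides: {missing_fraction(sides):.6%} of the circle is missing")
```

With 6 sides, roughly 17% of the circle is missing. At 60 sides that drops below 0.2%, and every further tenfold increase only shaves off a sliver that was already too small to notice. Ten times the geometry keeps buying a smaller and smaller visible improvement.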

Steps The Industry is Taking Now to Reach This Goal

As it stands, there are steps in place that can help developers overcome these obstacles and continue to push the boundaries of what our current games can do in terms of visuals. We’ve discussed ray tracing, but that is still out of reach in gaming. Fortunately, The Astronauts, the company behind an amazing game called The Vanishing of Ethan Carter, has brought a new technique to the forefront: photogrammetry.

A screenshot from The Vanishing of Ethan Carter Courtesy of Gamespot.com

This technique has been used in various fields for some time, but the above game was the first to really bring it into the world of gaming. It involves taking pictures of real life objects and settings. A lot of pictures, like hundreds, from every angle. In the past, movies like The Matrix have used it to build realistic 3D models of their sets, and Ethan Carter does something similar.

One screenshot of the game will show you how incredible the graphics are, and the best part is that PS4 is going to be getting it sometime in 2015. So, graphics like these are achievable now through this method. Of course, the game’s artists still had input of their own when it came to things that don’t exist in the real world, so it doesn’t put them out of business.

The current version of the Unreal Engine, the 4th iteration, has also developed technology to push the envelope while still taking into account the hardware limitations that we face. Known as Sparse Voxel Octree Global Illumination, or SVOGI for short, this technique is a variation of ray tracing.

It uses voxels, or 3D pixels that are shaped like cubes, to simulate the rays of light in a scene. These rays are built in “trees” from the voxels in ways that can be measured. This differentiates it from true ray tracing as those rays are one-dimensional and have no width to be measured like voxels.
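The “sparse octree” part of the name is easier to picture with a small sketch. The Python below is a generic sparse octree and not Epic’s implementation. It does no lighting at all, and the class and method names are assumptions for illustration. It only shows how a cube of space is split into eight smaller cubes, and how child cubes are created only where something actually exists.

```python
class OctreeNode:
    """One cube of space; children exist only for octants that contain something."""

    def __init__(self, center, half_size):
        self.center = center
        self.half_size = half_size
        self.children = {}  # octant index (0-7) -> OctreeNode

    def insert(self, point, depth):
        """Mark the voxel containing `point`, subdividing `depth` more levels."""
        if depth == 0:
            return
        index = 0
        child_center = []
        for axis in range(3):
            offset = self.half_size / 2
            if point[axis] >= self.center[axis]:
                index |= 1 << axis  # set this axis bit for the "upper" half
                child_center.append(self.center[axis] + offset)
            else:
                child_center.append(self.center[axis] - offset)
        child = self.children.get(index)
        if child is None:
            child = OctreeNode(tuple(child_center), self.half_size / 2)
            self.children[index] = child
        child.insert(point, depth - 1)

    def count_nodes(self):
        """Total number of cubes actually stored in the tree."""
        return 1 + sum(c.count_nodes() for c in self.children.values())


root = OctreeNode((0.0, 0.0, 0.0), 1.0)
root.insert((0.9, 0.9, 0.9), depth=3)
root.insert((-0.9, -0.9, -0.9), depth=3)
print(root.count_nodes())
```

Two points stored three levels deep need only 7 cubes here, while a fully filled tree of the same depth would need 585. That saving is the reason for keeping the tree sparse when most of a scene is empty air.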

We’ve Covered Light, What About the Rest?

Achieving true lighting in a video game is probably the most crucial step in reaching photorealism. There are still other fronts we need to push forward on though, and the industry knows this. Game engines like CryEngine and Unreal Engine 4 are pushing for more complex environments and objects that aren’t scripted.

These objects are programmed to react to your input like anything else would in the real world. You can throw them, knock them over, blow them up, and they will always react in the most realistic way possible. In addition, you have to consider particle effects such as dust clouds or other forms of moving weather.

Phil Scott, an employee of Nvidia, a major computer video card manufacturer that will most likely manufacture the PS5 graphics card, spoke out on the complexity of modeling human skin:

“Skin will accept light from the environment and then the light scatters around within the first few millimeters of flesh. Then it reemerges, colored by what it has encountered.”

And then of course you have eyes and hair. The former is incredibly hard to render realistically because the eyes themselves are a complex lens, and therefore light behaves very differently when reflected in them. Hair must flow and move realistically, not stay rigid as many older games have done.

PS5 Graphics: Photorealistic or Not?

That’s a hard question to answer. Many believe we won’t see graphics that are photo-realistic by 2020, but if one thing is certain, PS5 graphics will be pretty close to this goal if modern advances are anything to go on.

What do you think? What kind of graphics will we see on the PS5? Let us know in the comments!
